On August 6, 2023, Stack Diary published an article highlighting that Zoom included clauses in its Terms of Service (ToS) with far-reaching implications for personal data collection and use.

Specifically, parts of Zoom’s ToS implied that they used personal information to train machine learning and artificial intelligence (AI) models.

Not only did these clauses appear to breach several European Union laws, but news of them also spread rapidly, sparking a massive public outcry.

Zoom responded with a blog post and modified their ToS twice, but we still have some questions.

Let’s dive into this Zoom terms of service controversy in more detail.

Why Was The Zoom Terms of Service Controversial?

According to the introductory text of Zoom’s terms of service, when you create a Zoom account, you agree to, and are legally bound by, all of its terms and conditions.

They state in bold letters:

“Your acceptance of this Agreement creates a legally binding contract between you and Zoom.”

But on August 6, Stack Diary drew attention to parts of Zoom’s terms implying that the company used personal data in ways that may have breached laws like the General Data Protection Regulation (GDPR).

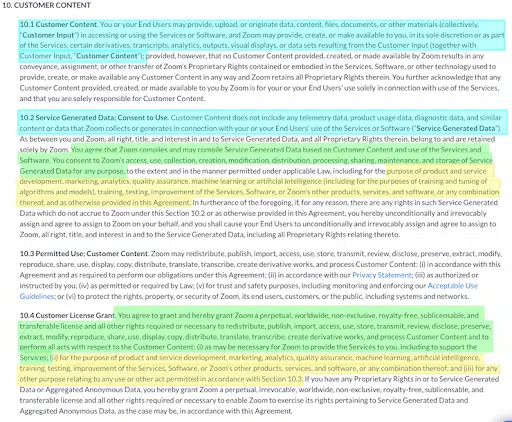

Below is a screenshot of the Zoom ToS as it appeared on August 6, recovered from archive.org.

The highlighted colors I added represent different legal requirements regarding the GDPR that I’ll reference throughout this article:

- Blue: Zoom’s definitions of Customer Content Data and Service Generated Data

- Green: Zoom’s lawful basis used to justify the processing of Customer Content Data and Service Generated Data

- Yellow: Zoom’s purpose for processing both Customer Content Data and Service Generated Data

The yellow-highlighted part of Section 10.4 stated that Zoom may use information from users to train its AI and other machine learning models, like Zoom IQ.

Two paragraphs above, in the green-highlighted part of Section 10.2, Zoom claimed consent as its legal basis for collecting these data types.

There was just one problem: Zoom never obtained valid opt-in consent from users in the first place, so its claimed consent fell short of the definition in Article 4 of the GDPR.

In the next section, let’s discuss how this breached EU data privacy laws in greater detail.

Zoom’s ToS Possible Breach of the GDPR

The initial version of Zoom’s ToS defined two types of data:

- Customer Content (defined in section 10.1)

- Service Generated Data (defined in section 10.2)

See the exact definitions in the blue highlighted sections in the screenshot I shared above.

But essentially, Customer Content represented customers’ and end users’ data generated using Zoom’s platform that legally qualifies as personal information.

It included information like data, content, files, documents, and other materials users may upload or provide while using Zoom’s services.

In contrast, the ToS defined Service Generated Data as:

‘… any telemetry data, product usage data, diagnostic data, and similar content or data that Zoom collects or generates in connection with your or your End Users’ use of the Services or Software’.

Also called metadata, this type of information can include personal data as defined by Article 4 of the GDPR, a view supported by court rulings such as the Court of Justice of the European Union’s Digital Rights Ireland case.

Following these definitions, there was a list of purposes for which Zoom may use such data, which included ‘product and service development’, ‘marketing’, and, notably, ‘machine learning and artificial intelligence’.

However, according to Article 4 of the GDPR, legal consent means:

‘any freely given, specific, informed and unambiguous indication of the data subject’s wishes by which he or she, by a statement or by a clear affirmative action signifies agreement to the processing of personal data relating to him or her’.

Zoom’s August 6 ToS fell well short of this legal definition. Here’s why:

- Section 10.2 of its ToS bundled several purposes into a single claimed consent, so that consent could not be considered ‘specific’.

- Zoom never asked the user to ‘affirmatively’ provide their consent through an action (in the data privacy world, this is called opt-in consent).

- Finally, one can argue that consent in this scenario couldn’t be ‘freely given’, as any user who refused had only one alternative — not using Zoom’s services (TechCrunch).

Zoom’s Not-So-Necessary Performance of a Contract

Technically, the performance of a contract is a specific lawful basis for processing data as defined by the GDPR.

Under Article 6(1)(b), the GDPR allows a controller to process personal data where this is ‘necessary for the performance of a contract.’

Could this apply to Zoom?

To take a second point highlighted by Stack Diary’s article, Section 10.4 granted Zoom a “perpetual, worldwide, non-exclusive, royalty-free, sub-licensable, and transferable license…” to use Customer Content.

Zoom justified this as ‘necessary to provide the services’, including supporting and improving its services, marketing, analytics, quality assurance, machine learning, and artificial intelligence.

In other words, Zoom framed its processing of Customer Content as necessary to perform a contract.

But in this case, the purposes listed by Zoom would very likely not qualify as necessary — marketing, analytics, machine learning, and artificial intelligence are not essential to Zoom’s communication services.

Zoom’s ToS Possible Breach of the ePrivacy Directive

Because its consent fell short of the GDPR’s Article 4 definition, Zoom would also breach another EU law, the ePrivacy Directive.

Specifically, Article 5 of the ePrivacy Directive prohibits:

“listening, tapping, storage or other kinds of interception or surveillance of communications and the related traffic data by persons other than users, without the consent of the users concerned…”

The ePrivacy Directive uses the same legal definition of consent as the GDPR, and as I’ve already explained, Zoom did not adequately meet the guidelines regarding opt-in consent.

How Did Zoom Respond?

As the bad press snowballed, Zoom updated its terms of service within 24 hours and published a blog post responding to the controversy.

However, the changes were still inadequate, leading to another update that left us with several questions and concerns.

In this next section, I’ll cover both revised versions of its ToS and explain why the current one is still unsettling.

An Unsuccessful First Attempt At Compliance

After the adverse reactions from consumers and the press, Zoom quickly updated its ToS on August 7, 2023.

At the end of Section 10.4, they added a brief statement that specified the following:

“Notwithstanding the above, Zoom will not use audio, video, or chat Customer Content to train our artificial intelligence models without your consent.”

For posterity’s sake, see a screenshot of this short-lived version of the Zoom ToS below, once again recovered from archive.org.


While this was meant to clarify the situation, it actually posed new issues.

Firstly, this statement applied only to Customer Content.

As a result, the collection of Service Generated Data based on Zoom’s unlawful definition of consent would remain noncompliant with EU privacy laws.

Secondly, this statement implied that Zoom’s processing of Customer Content would not rely on the GDPR’s ‘performance of a contract’ basis (which was untenable to begin with).

Instead, Zoom explicitly relied on consent, which we’ve already established was invalid.

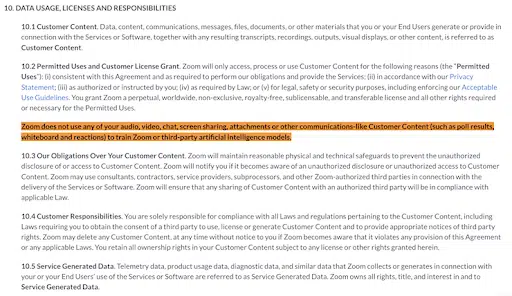

Zoom’s Current Terms of Service and The Issue of Metadata

The third time’s the charm, right? Well, maybe not so much in the case of the Zoom ToS.

On August 11, 2023, Zoom updated its terms again, finally backing off on artificial intelligence.

Below, you can see a screenshot of the current version of Section 10.

Let’s quickly cover what changed.

First, you’ll notice the section is much shorter overall. There’s no more mention of using your data to assist with machine learning and artificial intelligence other than the bolded text from Section 10.2 that reads:

‘Zoom does not use any of your audio, video, chat, screen sharing, attachments or other communications-like Customer Content (such as poll results, whiteboard, and reactions) to train Zoom or third-party artificial intelligence models.’

Get used to this sentence, as it now appears twice in their Privacy Policy, which also governs how they access, process, and use personal information from their users.

Additionally, Zoom removed most language about consent and placed the liability on you to inform your “End Users” about any applicable laws requiring you to obtain consent for third-party use.

Though this could be considered a satisfactory resolution for Zoom users who do not wish to have their data used for AI modeling, the issue of Service Generated Data, or metadata, remains.

Metadata can be personal data to the extent it can link back to an individual, and the current terms give Zoom total control over it.

Because metadata can be personal data, these terms remain noncompliant with EU privacy laws like the GDPR and the ePrivacy Directive.

As previously noted, entities subject to the GDPR should only process this metadata after obtaining active, opt-in consent, not the implied consent that Zoom describes in its updated policy.

The next section is insight from my colleague and fellow data privacy expert, Masha Komnenic, who has more to say on the current version of Zoom’s Terms of Service.

Examining Zoom’s Data Usage for IQ Features Raises Privacy Concerns

Section 10.1 of Zoom’s Terms of Service defines “Customer Content” as encompassing various forms of data and materials generated or provided by users in connection with their services.

However, a closer analysis of their data usage practices for providing Zoom IQ features raises several privacy concerns that deserve attention.

Let’s discuss these issues further.

Potential Privacy Breach via Metadata and Personal Data

While Zoom specifies that it doesn’t use actual audio, video, chat, or other communication content for training its AI models, the company’s emphasis on “Customer Content” creates a loophole that could result in privacy concerns.

Zoom asserts that such data falls outside the definition of personal data, a position that may not hold.

Even metadata associated with customer content can contain sensitive and personal information, and it’s concerning that Zoom doesn’t explicitly address this potential for privacy violations.

Moreover, it is unclear why such data needs to be collected to train AI models at all, especially when publicly available data could serve the purpose, which raises valid questions about Zoom’s data collection practices.

Third-Party AI Models and Data Retention

The Zoom IQ features also utilize third-party AI models, which adds more complexity to the platform.

As per the terms, third parties may temporarily hold onto customer data for “trust and safety” or “legal compliance purposes”.

But this raises potential surveillance concerns, particularly around the gathering of conversation intelligence.

The current clause suggests that third parties may share data with the US government if required, creating a possible avenue for privacy intrusion without clear limitations or redress measures.

The Importance of Being Vigilant Internet Users

In conclusion, while Zoom asserts that it doesn’t directly utilize the contents of user communications for training its AI models, the ambiguities in its terms raise substantial privacy concerns.

The broad categorization of “Customer Content” and the potential for metadata to contain personal data underscore the need for more stringent data protection measures and possibly redefining the scope of Customer Content.

Additionally, the involvement of third-party AI models and the temporary data retention by these entities for various purposes raise valid questions about the security and privacy of user interactions on the platform.

As users, we must be vigilant about how our personal information is processed and push for greater clarity and accountability in such data handling practices.

What We Can Learn From the Zoom ToS Controversy

Zoom taught business owners something fundamental, and it’s a tune we’ve been singing here at Termly for years — consumers care about what you’re doing with their personal data.

You cannot deceive your users or add sneaky paragraphs to your legal policies in the hope that they let you collect and process personal data in ways that skirt data protection laws.

Your customers pay attention; if you cross them like Zoom did, the consequences are dire.

How can you prove you’re a privacy-literate and respectful company? Here are some tips:

- Post a privacy policy that is accurate, easy to understand, and complies with applicable data privacy laws.

- Make sure the details in your terms of service also follow applicable laws.

- Be honest about what internet cookies you use and what they do, and give your users choices over them.

- Make it easy for your consumers to submit requests to act on their data privacy rights. One way to do this is by using a DSAR (data subject access request) form.

- Obtain proper opt-in affirmative consent from users (and don’t sneak in any implied consent language).

- Allow your users to easily change their minds and withdraw (or opt back into) consent at any time.

- Have security systems in place to protect the integrity and confidentiality of any personal data you collect, process, and use.
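To make the consent tips above more concrete, here is a minimal Python sketch of how a business might record consent. It assumes a hypothetical `ConsentLedger` class and made-up purpose names (not Zoom’s or Termly’s actual systems), and it illustrates three principles from this article: consent is tracked separately for each purpose (no bundling), every purpose defaults to "no" until the user takes an affirmative action, and withdrawing is as easy as opting in. Treat it as a sketch of the idea, not a complete compliance system.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone

# Hypothetical purposes; each one gets its own, separate consent (no bundling).
PURPOSES = {"product_analytics", "marketing", "ai_training"}


@dataclass
class ConsentEvent:
    purpose: str
    granted: bool
    timestamp: datetime


@dataclass
class ConsentLedger:
    """Hypothetical per-user consent record: opt-in, per purpose, withdrawable."""

    user_id: str
    events: list[ConsentEvent] = field(default_factory=list)

    def _record(self, purpose: str, granted: bool) -> None:
        # Reject purposes the user was never told about.
        if purpose not in PURPOSES:
            raise ValueError(f"unknown purpose: {purpose}")
        self.events.append(ConsentEvent(purpose, granted, datetime.now(timezone.utc)))

    def opt_in(self, purpose: str) -> None:
        # Call only after a clear affirmative action, e.g. ticking an unticked box.
        self._record(purpose, True)

    def withdraw(self, purpose: str) -> None:
        # Withdrawal is a single call, just like opting in.
        self._record(purpose, False)

    def has_consent(self, purpose: str) -> bool:
        # The most recent decision for this purpose wins.
        for event in reversed(self.events):
            if event.purpose == purpose:
                return event.granted
        # Default is False: silence or simply accepting the ToS is not consent.
        return False


if __name__ == "__main__":
    ledger = ConsentLedger("user-123")
    ledger.opt_in("product_analytics")
    print(ledger.has_consent("product_analytics"))  # True
    print(ledger.has_consent("ai_training"))  # False: never opted in
    ledger.withdraw("product_analytics")
    print(ledger.has_consent("product_analytics"))  # False
```

Keeping an append-only history of timestamped decisions, rather than a single on/off flag, is a deliberate choice: it lets you show when, and for which purpose, a user said yes or no.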

Summary

We’re living in an era where a terms of service scandal can topple, overnight, the reputation of a behemoth video conferencing platform like Zoom, a service that was once the go-to for virtually everyone online, personally and professionally.

One thing is certain: people read your legal policies.

How you present that information and collect, process, and use personal data matters.

We all use the same internet, so why not do it the right way? As always, Termly is here to help.