Controversies over how data sets train Artificial Intelligence (AI) tools have made the public wary of AI.

A common perception is that AI is collecting any information it can scrape online to train its algorithms, disregarding the privacy of individuals and the requirements of data protection laws.

Public scandals — such as Clearview AI’s illegal scraping of Facebook — have put social media platforms at the forefront of the public’s concerns over AI and data privacy.

In this article, I clarify how Generative AI tools are trained and how publicly available data can be used as part of the process following certain legal and technical requirements.

What Is AI?

Artificial intelligence or AI refers to machines that can perform human-like functions.

There are different types of AI, for example:

- Weak AI performs a narrow range of functions based on fixed patterns it was programmed to detect.

- Strong AI performs complex functions by finding its own patterns in a data set, almost like having a “mind” of its own, and can continue learning independently.

Of course, unlike humans, AI does not have an actual mind like you and I do. Instead, it relies on the types of data sets it’s given.

Also, unlike humans, AI can detect patterns in gigantic amounts of information in a way that we simply cannot.

It uses those patterns to complete its primary function.

For example, when you ask an AI blog writer to create content, it generates its response by accessing a dataset, sometimes containing hundreds of gigabytes, if not terabytes, of text from various sources.

How Are AI Models Trained?

To understand how AI models are trained, it is important to note that recent popularized AI tools qualify as Generative AI (GenAI), including:

- OpenAI’s ChatGPT-3 and 4

- Google’s PaLM 2 and Bard

- GitHub’s CoPilot

GenAI is a branch of AI that specializes in generating content, whether in the form of text, pictures, videos, or code.

It uses algorithms called Large Language Models (LLMs) that need vast amounts of data to learn and train from.

Training an LLM includes an initial training phase, also called the pre-training phase, followed by fine-tuning the model on specific tasks or areas of improvement. Both phases require large amounts of data, ranging in thousands or tens of thousands of examples.

Let’s look at what a few industry leaders say about how they train their GenAI tools.

ChatGPT

Let’s start with ChatGPT, OpenAI’s LLM, which lists the following three categories in its FAQ:

- Publicly available information

- Information licensed by OpenAI from third parties

- Information provided by users or human trainers

Read the screenshot below to see more about how OpenAI claims it trained ChatGPT.

Technically, GPT-3 was trained on 45 terabytes of data, while GPT-4, used by ChatGPT Plus, was estimated to be trained on one petabyte of data.

To give you an idea of how much information this is, one terabyte would represent 500 hours of HD videos, and one petabyte is 1000 terabytes.

Bard

Now, let’s look at how Google’s AI chatbot, Bard, was trained.

They list the following sources:

- Bard conversations

- Related product usage information

- User location

- User feedback

You can read more in the screenshot below from Bard’s privacy notice.

I want to point out how Google references they use data to train Bard consistent with their Privacy Policy, which mentions the following concerning collecting data from publicly available sources for AI training:

“For example, we may collect information that’s publicly available online or from other public sources to help train Google’s AI models and build products and features like Google Translate, Bard, and Cloud AI capabilities. Or, if your business’s information appears on a website, we may index and display it on Google services.”

CoPilot

Finally, let’s read how GitHub uses data to train their AI programmer, CoPilot.

Read more in the screenshot below, which comes from GitHub’s CoPilot features page.

Like OpenAI and Google, GitHub also references relying on publicly available information as the source for its AI training.

The Different Types Of Data Used To Train AI

GenAI needs large amounts of data and uses different sources to train these algorithms — first as a primary resource for its development and then to improve its accuracy and develop its fields of applicability.

From the three examples I just covered, we can surmise that entities use three major categories of data to train AI models.

Publicly Available Data

Most GenAI uses publicly available data for training purposes.

Publicly available data is defined as any information accessible to the public with certain restrictions on how to access, use, and distribute it.

These restrictions often come from the proprietary or intellectual rights over the data held by the entity making the data public.

For example, Facebook’s public posts fall under this definition.

![]()

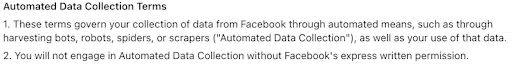

Posts made public by the user are accessible to other parties, but Facebook’s Terms of Service limits its usability and distribution.

More specifically, in the case of AI model training, an automated collection of these posts is limited by their Automated Data Collection Terms, which prevents external parties from collecting it without Facebook’s written permission.

So, while some restrictions exist, its general availability makes it a great resource for AI model training.

As a result, it’s a predominant source for the pre-training phase of AI models, and the most common sources for this information include:

- Web archives: Sites like Common Crawl crawl the web to extract raw page data, metadata, texts, and other accessible resources, archiving them. GPT-3’s pre-training datasets comprised 60% of the Common Crawl corpus.

- Social media’s public content: These platforms represent immense pools of data, giving insights into millions of behaviors and characteristics. Each platform has different terms restricting the data external entities can collect, and they use public data to train their own AI models, like Meta and X/Twitter.

- Open access repositories: Sources like Github’s public code repositories hold research data from public or private entities and provide free and permanent access to datasets for anyone to use.

- Public institutions open databases: Sites like the US National Archives usually include institutional data on various topics, like economics, history, sciences, humanities, and more.

Open Data vs. Publicly Available Data

When defining public data, it is also important to mention open data.

Often used interchangeably, open data is a subcategory of public data characterized by the absence of restrictions on its access, use, and distribution.

Some of the most important sources of open data are governments, which have open data portals, sharing data collected by public institutions.

Open data is usually under an open license, well-structured, and machine-readable.

Product Usage Data

Another data source for training AI models is product usage data, which users generate when using a product.

Because this supposes that a product is already functional, this data source is used during the fine-tuning phase of AI training to provide insights into user behavior and preferences.

You can sort product usage into two categories:

- User content data: Data contained in the content generated by users when they interact with the product and can include texts, images, videos, code, and overall any information the user decides to share with the product.

- Metadata: Data generated in connection with user content data, as in “data about data,” and usually includes details about user content data, such as time of creation, source, size, location, device settings, and more.

But, since this type of data is linked to users, it is considered personal information.

How a product collects and uses this type of data is subject to privacy laws and the companies’ Terms of Services.

Licensed Product Data

Like product usage data, Licensed Product Data is obtained through third parties to which the AI is licensed.

Through a license, a company may use AI models developed and trained by another company to operate within its products.

For example, this is how Microsoft obtained a license to use OpenAI’s Chat GPT-3 technology for its product.

However, it’s difficult to know exactly to what extent Product Usage Data is collected by OpenAI to improve its AI model.

A Word From An Expert

When asked about the implications AI has had on data privacy for businesses and consumers, Anupa Rongala, CEO of Invensis Technologies, said, “AI has transformed data privacy by making security smarter — but also more vulnerable.”

She added, “Businesses now analyze vast datasets in real time, detecting threats faster than ever. At the same time, AI-driven automation increases risks, as algorithms often collect, process, and infer sensitive information without clear user consent.”

“Businesses embracing responsible AI will not only comply with regulations but also build long-term trust.” Anupa Rongala, CEO, Invensis Technologies

To her, the biggest challenges include, “Bias, unauthorized data access, and over-reliance on AI-driven decision-making.”

“Striking a balance is possible — clear governance, human oversight, and ethical AI practices are non-negotiable.

Is Training AI Using Public Data Permitted Under Data Privacy Laws?

Of all the sources used for training AI models, publicly available data constitutes the main resource, firstly because of its availability but also because there are little to no restrictions on access, use, and distribution.

As a result, there has been an increase in the scale and frequency of publicly available data collection since the apparition of GenAI tools.

From a regulatory perspective, publicly available data — to the extent it qualifies as personal information — is less regulated by privacy laws than non-public personal information.

The ‘public’ dimension suggests that at some point, the individual to whom it belongs made it public, renouncing the expectations of privacy.

In this section, I’ll focus on EU and U.S. privacy laws and explain how they define publicly available personal data and what each requires for legal collection.

Privacy Laws in the EU

In the EU, the GDPR does not define publicly available personal data, which means there is no differentiation between publicly available and personal data.

In application, the GDPR applies to any personal data, regardless of its source.

As a result, the notice requirements for controllers outlined by Article 14 regarding the collection of information from sources other than the data subject themselves pertain to the collection of public data under the GDPR.

Notably, a purpose and a lawful basis are necessary for processing said personal data.

What the GDPR Says About Public Data

Though the GDPR doesn’t define public data, it does mention it and requires controllers to disclose whether the data came from publicly available sources in Article 14.

Additionally, Article 9 sets rules that apply to special categories of personal data and seems to limit requirements when it comes to publicly available data.

If the processing relates to personal data manifestly made public by the data subject, no explicit consent or other legal basis as enlisted in Article 9 (mainly specific laws and regulations or establishment, exercise, or defense of legal claims) is required.

So, when it comes to training AI models on publicly available personal data under the scope of the GDPR, we can deduce that a business would have to follow several steps:

- Step One: Choose a lawful basis for training AI models using publicly available data. Whether it’s consent, contract, legitimate interest, or legal obligation, a legal basis should be chosen and its requirements implemented according to the provisions of Article 6.

- Step Two: Inform data subjects that their publicly available personal data has been processed to train AI models, according to Article 14.

- Step Three: Train AI models on special categories of personal data (data revealing racial or ethnic origin, political opinions, religious or philosophical beliefs, etc.) according to the requirements of Article 9, meaning only data manifestly made public by data subjects or where the data subject gives explicit consent, and no other laws prevent it.

Knowing this, it’s noteworthy that the EU has started acting specifically on AI model training.

In March 2023, the Italian Data Protection Authority, the Garante, published an enforcement order against OpenAI.

Among several non-compliance findings about ChatGPT, a key criticism was that OpenAI did not secure a legal basis under Article 6 GDPR to process personal data for initially training the algorithms.

Similarly, in October 2022, France’s CNIL issued an injunction on Clearview AI and fined the company.

Among several GDPR breaches, Clearview AI’s web crawling practices to train its facial recognition software were explicitly found to breach Article 6 lawful basis obligations.

The EU AI Act

Europe is now at the forefront of AI regulation after parliament passed the EU AI Act.

Last year, I spoke to Privacy Attorney Anokhy Desai, who at the time said, “All eyes are on the EU’s AI act, which has been in the works for a few years now. It is expected to be adopted early next year, which would mean a late 2025 or early 2026 date for it to come into effect.”

“All eyes are on the EU’s AI Act.” Anokhy Desai, Privacy Attorney | CIPP/US, CIPT, CIPM, CIPP/C, CIPP/E, FIP

Now partially in force, the law mainly outlines transparency requirements for GenAI and places the technology into different risk levels.

Desai adds, “The AI Act itself takes a risk-based approach to regulating AI, and a recent provision added to the AI act would require ‘companies deploying generative AI tools, such as ChatGPT… to disclose any copyrighted material used to develop their systems’.”

However, the EU AI Act does not dictate what information can be used for training purposes.

“There is no word yet of whether future amendments, carveouts, or rules will delineate acceptable types of publicly available data.” says Desai, “By nature of being public, there is no retroactive privacy added to that data.”

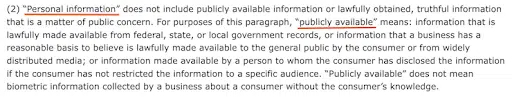

Privacy Laws in the US

In the US, some states have taken a different approach to publicly available data, quite the opposite of the regulations in the EU.

Desai says, “Among the state-level privacy laws that have gone into effect this year, a handful include provisions about AI, like the CPRA’s limitations on data retention, data sharing, and use of sensitive personal information with AI.”

But the following state laws have excluded publicly available data from the definition of personal information and, therefore, from the application and scope of these laws:

- California Consumer Privacy Act (CCPA)

- Virginia Consumer Data Protection Act (VCDPA)

- Colorado Privacy Act (CPA)

Take the CCPA as amended by the CPRA as an example and read how it defines “personal information” and “publicly available” in part below.

As a result, publicly available data doesn’t fall under the CCPA’s requirement of notice nor under the consumer rights to deletion and opting out of sales and sharing.

“While none of these laws mention the use of publicly available data in training AI models,” says Desai, “the exponential increase in proposed AI legislation points to the potential for such a bill to be proposed in the future.”

The lack of protections around public data may explain recent controversies over some companies relying on this information to train AI models without obtaining any form of consent or other lawful basis.

For example, in August 2023, Zoom was at the center of a controversy for its Terms of Service clauses on using personal information to train machine learning and AI models.

Similarly, OpenAI, Microsoft, and Google also face class action lawsuits in the US for their data scraping practices for AI model training.

Can Social Media Platforms Use Your Data To Train AI?

As we’ve seen, social media constitutes an immense pool of data — public or not — and can be a resource for AI model training.

Generally speaking, whether data is public or private depends on the privacy settings a user chooses on the platform they’re using.

Different platforms have unique scopes to what can be public and varying approaches to how others can use this data to train AI models.

Let’s cover some of the popular platforms briefly in the next section.

Facebook & Instagram

Facebook and Instagram fall under the scope of Meta’s policies regarding public data.

Firstly, yes, both platforms use publicly available data from their platform to train Meta’s AI, as recently reported by Meta President of Global Affairs, Nick Clegg.

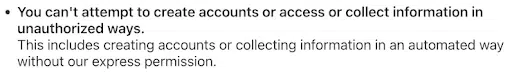

Regarding collecting public data by external parties for AI model training, both platforms’ Terms of Use do not allow it without their express permission — which we can only suppose they do not grant to competitors.

Below, you can see Facebook’s Data Collection Terms.

In the following screenshot, you can read Instagram’s Terms.

X/Twitter

Similarly to Meta, the Terms from X (formerly Twitter) mentions using publicly available data to train the companies’ machine learning models, as reported by Techcrunch.

Additionally, during the launch of xAI, Elon Musk declared that they’ll use public tweets to train his new AI.

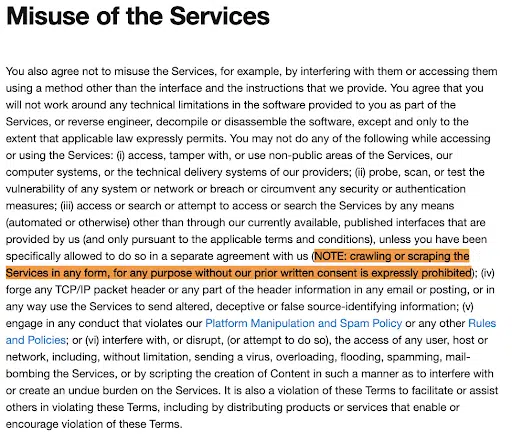

Regarding giving external parties access to the platform’s public data, X specifically updated its terms to ban any crawling or scraping to prevent AI training.

You can see those terms in the screenshot below.

Summary

AI model training has recently raised much concern for its immense appetite for data — including personal data.

But, tapping into publicly available information has become the norm for AI developers because privacy laws are more lenient regarding this type of data.

However, recent events indicate that regulators, the public, and AI companies have yet to build common ground to grow more trustworthy AI training practices, including:

- Clearview AI’s sanction for illegally collecting public pictures

- The class action lawsuits against major AI players

- Negative public response to Zoom’s terms of service in the summer of 2023

A first step towards this trust might be the ratification and implementation of the EU AI Act, which European institutions finalized in 2024.

Regardless, personal data must remain protected following applicable laws, and we must continue to educate ourselves on public data and how it gets used.