AI technology is so new that the legal landscape is still developing and evolving. This apparent lack of technical guidelines can put businesses at risk.

According to the American Bar Association, court cases involving AI have significantly increased, bringing to light important questions, such as whether and how these tools fall under the scope of data privacy laws.

To help your business use AI technology responsibly and in line with privacy laws, here’s a list of best practices that may save you a lot of time and hassle down the road.

Data Privacy and AI

No matter how you slice it, AI and data privacy are intrinsically related.

AI relies on gigantic datasets to function properly, and some of that data is likely to fall under the legal definition of personal data, or personal information, which is broadly defined as:

“… any information relating to or identifying a specific person or household or persons, such as names, address, IP address, emails, birthdates, etc.”

But the law moves at a much slower pace than technology. The AI legal landscape is still developing, as are the potential consequences.

Right now, there is no one-size-fits-all list of requirements for all entities to easily follow. Because of this, businesses are caught between a rock and a hard place.

How can your business take advantage of using these exciting AI tools without facing future legal backlash or other repercussions?

How To Find Responsible AI Tools & Platforms

Here are my suggestions for finding responsible third-party AI tools and platforms, which I expand upon in detail in the next section:

- Prioritize AI platforms that comply with existing data privacy laws, specifically laws that protect individual user privacy, like the General Data Protection Regulation (GDPR) and the California Consumer Privacy Act (CCPA).

- Vet the security and safety of the AI platforms your business uses. Data shows that cybersecurity risks and crimes continue to increase, and global cybercrime damage costs are predicted to reach $10.5 trillion annually by the end of this year.

- Prioritize using AI tools that build user privacy into every part of their operations. This means actually reading through the companies’ legal policies, including privacy and cookie policies and terms and conditions agreements.

Let’s discuss each of these in a bit more detail.

Responsible AI Aligns with Data Privacy Laws

When choosing AI tools, look for ones that claim to comply with data privacy laws.

For example, you might look for AI features from Customer Relationship Management (CRM) solutions that comply with the GDPR, the CCPA, and the EU AI Act.

Why? These laws outline requirements that protect the integrity and security of consumer personal information. This adds a layer of protection for your business and its consumers.

How To Verify?

Read through the privacy policy of any AI platform your business is considering using.

This will tell you all about how they use personal information, including the information collected about your customers, should you choose to use their tool.

It should also tell you which laws they’re subject to. For example, they might have CCPA- or GDPR-specific clauses listing the various rights users can exercise.

If your business is subject to specific laws, you might even choose AI tools that are subject to those same pieces of legislation.

Responsible AI Prioritizes Safety and Security

Cyber risks have increased over the last few years, as experts predicted and as recent cybersecurity statistics highlight.

The costs associated with cybercrime have also gone up.

This is why it’s important to look at the security practices outlined by any AI platforms or other third-party entities introducing your business to an AI-based tool.

Bad actors no longer target just the big guys. They frequently go after individuals, small businesses, educational organizations, nonprofits, and more.

How To Verify?

To verify the safety and security of an AI platform or third-party service, look through their terms of service agreement and any available end-user license agreements.

They should have several clauses highlighting their security practices. Read through these carefully, and look for the following positive signs:

- Mentions of security practices in place to protect data

- Details about how often the security practices are updated and verified

- A plan of action just in case a privacy breach or incident occurs

- Contact information so consumers can reach the company if they suspect their information was breached

Responsible AI Prioritizes User Privacy

Try to find AI tools that promise to respect user personal information, privacy laws, and data security, and that prioritize building user privacy into the foundation of their platform.

For example, browser platforms like Brave’s Leo and DuckDuckGo’s AI Chat enable users to use AI search without conversation tracking.

Other platforms, like CustomGPT.ai, help businesses build custom AI assistants trained on internal materials and resources without sharing any data.

How To Verify?

When choosing AI tools for your business, you can learn a lot about their data practices and AI policies by reading their Privacy Policy and Terms of Service Agreement.

They should have clearly written clauses explaining exactly what data they collect and how they use it.

If this information is missing from the policy (or worse, if the company doesn’t even have a privacy policy), it might be safest to consider other options.

Data Privacy Best Practices When Using AI

Next are some best practices businesses can keep in mind when using AI tools, especially businesses that plan to share consumer personal data with these platforms.

Be Transparent About Your Use of AI

It’s important that you are honest with your users about how your business uses AI, including informing them about any third-party tools you rely on.

For example, here are a few ways you can be transparent about your use of AI, which will help show your site visitors that you are honest and trustworthy:

- Clearly state if and when AI was used across your website to generate content, including images, videos, voice-over, or any other type of material.

- Clearly state in your privacy policy what AI platforms you use, if you share data with that platform, what data is shared, and how your users can opt out of the sharing.

This might even be a legal requirement for your business, depending on what data privacy laws apply to you and your consumers.

Limit Data Collection and Sharing

When using AI tools, be mindful to limit how much data you collect and share with them.

A good mindset to adopt is to only share data when it’s absolutely necessary to achieve a specific purpose. That purpose should be stated clearly in your business’s privacy policy.

For example, if you want to analyze the results of a customer survey by inputting the data into an AI platform, consider removing all personally identifiable information, like any customer names, email addresses, birth dates, or other details.

Again, this is a requirement under laws like the GDPR, so if it applies to your business, you legally must minimize your data collection.
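The survey example above can be sketched in a few lines of Python. This is only a minimal illustration, not a complete anonymization method: the field names in `PII_FIELDS`, the sample response, and the simple email pattern are all assumptions made for the example, and dropping obvious identifiers does not guarantee that the remaining data can’t be linked back to a person.

```python
import re

# Hypothetical column names; adjust these to match your own survey export.
PII_FIELDS = {"name", "email", "birth_date", "ip_address"}

# Simple pattern for email addresses that may appear inside free-text answers.
EMAIL_PATTERN = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")


def strip_pii(row: dict) -> dict:
    """Return a copy of a survey row without direct identifiers."""
    cleaned = {}
    for key, value in row.items():
        if key in PII_FIELDS:
            continue  # drop the identifying column entirely
        if isinstance(value, str):
            # Mask any email address typed into a free-text field.
            value = EMAIL_PATTERN.sub("[email removed]", value)
        cleaned[key] = value
    return cleaned


# Made-up sample data for illustration only.
responses = [
    {
        "name": "Ada Example",
        "email": "ada@example.com",
        "rating": 4,
        "comment": "Great service, reach me at ada@example.com",
    },
]

safe_rows = [strip_pii(row) for row in responses]
print(safe_rows)
```

Only `safe_rows` would then be passed to the AI platform. Free-text answers can still contain names, phone numbers, or other details that this sketch doesn’t catch, so a manual review is still a good idea.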

Obtain User Consent Before Sharing Data

If your business wants to share any consumer data with an AI tool for any reason, it’s important to ensure you first obtain proper consent from the user, especially if they’re protected by any data privacy laws.

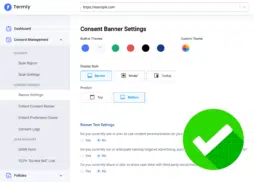

For example, you can add a consent banner to your page with a link to a cookie policy that clearly mentions any cookies or trackers your site uses that are related to AI tools or platforms.

Label these cookies properly and allow your users to choose if they want to specifically accept those cookies or not. You should also mention this data sharing explicitly in a clause in your privacy policy.
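On the back end, the same idea can be expressed as a check that runs before any data leaves your systems. The sketch below is a simplified assumption of how that might look: `ConsentRecord`, the `"ai_tools"` category label, and `may_share_with_ai` are hypothetical names, not part of any particular consent platform.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class ConsentRecord:
    """Hypothetical record of the choices a visitor made in a consent banner."""
    user_id: str
    accepted_categories: set = field(default_factory=set)


# Hypothetical category label for cookies and trackers tied to AI tools.
AI_CATEGORY = "ai_tools"


def may_share_with_ai(consent: Optional[ConsentRecord]) -> bool:
    """Allow sharing only when the visitor explicitly accepted the AI category."""
    if consent is None:
        return False  # no recorded choice is treated as no consent
    return AI_CATEGORY in consent.accepted_categories


accepted = ConsentRecord("user-1", {"essential", AI_CATEGORY})
declined = ConsentRecord("user-2", {"essential"})

print(may_share_with_ai(accepted))   # True
print(may_share_with_ai(declined))   # False
print(may_share_with_ai(None))       # False
```

Treating a missing record as a refusal keeps the default on the cautious side, which fits the opt-in approach described above.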

Ensure Your Users Can Follow Through on Their Privacy Rights

It’s vital that your users are able to properly follow through on their privacy rights, including any requests made regarding data that was shared with an AI tool.

While the specific consumer rights vary depending on the law, most outline the following:

- The right to access their data

- The right to correct their data

- The right to delete their data

- The right to amend their data

- The right to cease or limit data collection

- The right to data portability

Imagine if one of your users in Europe, protected by the GDPR, requests that the information you shared with an AI tool be corrected or deleted.

You need to have a method in place for reasonably following through on these requests, or you could risk facing penalties for violating privacy laws.
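One possible starting point is simply keeping track of which tools received each user’s data, so you know who to contact when a request arrives. The sketch below assumes a hypothetical in-memory `SharingLog` class and made-up tool names. A real system would store this log durably and then follow each vendor’s own deletion or correction process.

```python
from collections import defaultdict


class SharingLog:
    """Hypothetical in-memory log of which AI tools received each user's data."""

    def __init__(self):
        self._tools_by_user = defaultdict(set)

    def record_share(self, user_id: str, tool: str) -> None:
        """Note that this user's data was shared with the given tool."""
        self._tools_by_user[user_id].add(tool)

    def tools_for(self, user_id: str) -> list:
        """List the tools that currently hold this user's data."""
        return sorted(self._tools_by_user.get(user_id, set()))

    def handle_deletion_request(self, user_id: str) -> list:
        """Return the tools to contact about deletion, then clear the local entry."""
        tools = self.tools_for(user_id)
        self._tools_by_user.pop(user_id, None)
        return tools


log = SharingLog()
log.record_share("user-42", "survey-analyzer")
log.record_share("user-42", "chat-assistant")

to_notify = log.handle_deletion_request("user-42")
print(to_notify)  # ['chat-assistant', 'survey-analyzer']
```

The returned list only tells you which platforms to contact. Confirming that each one actually corrected or deleted the data remains a separate step.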

How Termly Can Help You Communicate Your AI Policies with Your Users

Termly’s suite of consent solutions and policy generators can also help your business transparently inform your users about your AI policies.

Our legally backed Privacy Policy Generator enables you to add an AI clause to your policy and asks about what platforms your business uses.

It features a drop-down checklist of common third-party platforms as well as the option to input your own tools.

It’s easy to use, affordable, and helps ensure you’re keeping your users properly informed about AI and their personal data privacy.

Reviewed by Masha Komnenic, CIPP/E, CIPM, CIPT, FIP, Director of Global Privacy